Linux tools – developer's basics

When a project enters the maintenance phase, it usually means that the system is already running in production and the main development phase is over. During this period, especially in its early stages, the focus shifts from delivering new features to fixing bugs and handling overlooked edge cases. These often stem from data flows that were not fully simulated during testing to match production data. Such cases can be hard to reproduce, especially when the bug report is not detailed enough.

Implementing proper logging inside the application allows future maintainers to quickly identify the source of problems. Even in later phases, when additional systems get integrated, it makes providing business support for complex projects much easier. But to make full use of logging, it's crucial to have a solution for indexing and searching through logs. One popular choice here is the ELK stack (E – Elasticsearch, L – Logstash, K – Kibana). This combination provides a way to aggregate logs from different sources, transform them into JSON, and search, filter and visualize them.

Unix tools on Windows

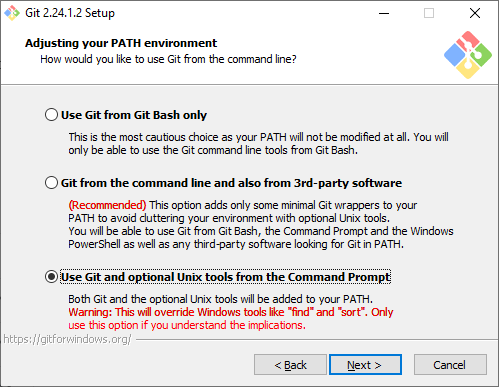

Sometimes we might not be lucky enough to have such a solution at hand, or the situation may require looking at the raw logs. In such a case, all you need is a Linux environment, or just some of the basic tools it provides. If you're running Windows, all is not lost. You most likely have Git installed, and during its installation you are offered an optional installation of the Unix tools. If you didn't select that option, you can still run the installer of the newest Git version to update it without any side effects.

I use the third option. As the warning says, though, it can cause behavior that differs from the default (Windows) one, because it overrides some of the commands. If you run into a problem (I haven't encountered any so far), you can simply remove the tools from the PATH. If you're still doubtful, it's also fine to select the second option. I prefer the third option because of its much better sort tool. Another nice thing is that Git Bash comes with SSH, so you don't have to install additional clients like PuTTY or WinSCP.

Now we should be able to start Bash straight from the command prompt, because it's in the PATH.
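Before going further, you might want to check which of the tools covered below are actually reachable from Git Bash. This is a small sketch. check_tools is a made-up helper name, and the list of tools is just a selection from this article.

```shell
# Hypothetical helper: report which of the given tools can be found on the PATH
check_tools() {
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: found"
    else
      echo "$tool: missing"
    fi
  done
}

# A selection of the tools discussed in this article
check_tools ssh scp gzip zcat zgrep grep less cut tr sort uniq xargs split sed awk
```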

If the remote machine is running Linux, you might be tempted to skip this installation. However, depending on the environment, some tasks like data post-processing might be better run on your local machine, to reduce the load on the server (disk space, RAM and CPU).

Useful tools and commands

Using the commands and tools introduced below might not be trivial if you haven't had the chance to work with Linux environments, but don't give up. In the long run, mastering them will greatly increase your efficiency. For the time being, let's assume familiarity with some basic commands (ls, cd, pwd) and knowledge of the difference between the home directory (~/ or /home/username/) and the root directory (/). So, without further ado:


ssh user@hostname

The authenticity of host (...) can't be established.

The first time you connect to a remote host, you might be shown the host key fingerprint and asked for confirmation. Ideally, compare this fingerprint with the one you've been given, to make sure you're not connecting to an impostor. After that, you will be prompted for a password. If you need an automated (scripted) login, you will need an SSH key or some additional tools.

ssh -t user@hostname "top -n 1 | head -n 5"

ssh -t user@hostname "top -n 1 | head -n 5 > top.log"

ssh -t user@hostname "top -n 1 | head -n 5" > top.log

This is a basic way of running a command on an SSH server instead of opening an interactive shell. The quotes mark the part that will be run on the remote host. Spot the difference between the second and the third command: the second one creates top.log on the remote machine, while the third creates it locally. The -t option allocates a pseudo-terminal, which top needs because it is a screen-based program. Alternatively, top's batch mode (top -b -n 1) produces plain text and works without -t. This also keeps terminal control sequences out of a locally saved log.

scp /path/to/file user@hostname:/path/to/destination

scp user@hostname:/path/to/file /path/to/destination

The scp program lets you copy files to a remote host (the first command) and from it (the second one). Be warned that scp overwrites existing files at the destination without asking, as long as you have write permission.

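gzip

Example: ssh user@hostname 'gzip -c large_logs.txt' | gzip -cd > large_logs.txt

A tool for compressing files. The -c option writes the compressed data to standard output instead of replacing the file, and -d decompresses. You may want to compress a large file before transferring it over SSH, to reduce the transfer size. Note that -t is deliberately omitted here. A pseudo-terminal translates line endings, which would corrupt the binary stream.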
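The remote example pipes the compressed stream through SSH. You can try the same -c / -cd round trip locally first and see how much smaller the transferred data gets. The file names and log content below are made up.

```shell
# Work in a throwaway directory so the example doesn't touch real files
cd "$(mktemp -d)"

# Create a repetitive sample log (made-up content); logs like this usually compress very well
for i in $(seq 1 1000); do
  echo "2024-01-01 12:00:00 INFO request processed id=$i"
done > large_logs.txt

# -c writes the compressed data to standard output and leaves the original file in place
gzip -c large_logs.txt > large_logs.txt.gz

# Compare sizes in bytes: the compressed one is what would travel over SSH
wc -c < large_logs.txt
wc -c < large_logs.txt.gz

# -cd decompresses back to standard output, like the local end of the SSH example
gzip -cd large_logs.txt.gz > restored.txt
cmp large_logs.txt restored.txt && echo "round trip OK"
```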

[z]cat

A basic command for printing the contents of one or more files to standard output. With the z prefix, it also handles compressed files (e.g. zcat logs.gz). Some other z-prefixed commands, such as zdiff, may use the /tmp directory to perform the operation. Like the other commands here, it accepts multiple files, so glob patterns can be used.
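Here is a short sketch of zcat combined with a glob over several compressed files, for example logs from different nodes. The file names and content are made up.

```shell
cd "$(mktemp -d)"

# Two hypothetical per-node logs, compressed in place (gzip replaces them with .gz files)
printf 'node1 line 1\nnode1 line 2\n' > node1.log
printf 'node2 line 1\n' > node2.log
gzip node1.log node2.log

# zcat only decompresses to standard output; the .gz files stay on disk
zcat node1.log.gz

# The shell expands the glob, so zcat receives both files and prints them one after another
zcat node*.log.gz > all_nodes.txt
cat all_nodes.txt
```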

[z]diff

Compares files line by line and supports many options. If you want to compare lines regardless of their order in the file, or to compare JSON lines, this tool might not be sufficient. Awk and jq might do a better job here.
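To illustrate the ordering problem, the sketch below compares two made-up ID lists. It uses plain diff first and then a common awk idiom that ignores line order. The awk one-liner is a standard technique rather than anything specific to this article.

```shell
cd "$(mktemp -d)"

# Two made-up lists of processed IDs, partly the same but in a different order
printf 'id=1\nid=2\nid=3\n' > before.txt
printf 'id=3\nid=1\nid=4\n' > after.txt

# diff works line by line, so a mere reordering already shows up as changes
# (its exit status 1 only means "files differ")
diff before.txt after.txt

# Order-insensitive alternative: print lines of after.txt that never appear in before.txt.
# While reading the first file (NR == FNR), every line is stored in the seen array;
# lines of the second file are printed only if they were never seen.
awk 'NR == FNR { seen[$0]; next } !($0 in seen)' before.txt after.txt > only_in_after.txt
cat only_in_after.txt
```

Swapping the two file arguments gives the lines present only in before.txt. Keep in mind that the NR == FNR idiom misbehaves if the first file is empty.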

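[z][e|f]grep

Example (ignore case, include 10 preceding and 20 following lines): zgrep -i -B 10 -A 20 'error' logs.gz

This is probably the most popular tool for searching a file for lines that match a pattern. The differences between the grep variants are subtle. egrep is equivalent to grep -E (extended regular expressions), fgrep is equivalent to grep -F (fixed strings, no regex), and the z prefix adds support for compressed files. Recent GNU grep releases warn that egrep and fgrep are obsolescent, so the -E and -F forms are the safer choice.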
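The context and case options can be tried on a small, made-up log. The sketch below uses smaller -B/-A values than the example above and also shows the effect of -F and -E.

```shell
cd "$(mktemp -d)"

# Made-up log with an error surrounded by context lines
printf '%s\n' \
  'INFO start' \
  'DEBUG payload id=7' \
  'Error: timeout calling 10.0.0.1' \
  'INFO retry 1' \
  'INFO retry 2' \
  'INFO done' > app.log
gzip -c app.log > app.log.gz

# -i ignores case (so "Error" matches), -B 1 adds one line before, -A 2 two lines after
zgrep -i -B 1 -A 2 'error' app.log.gz > context.txt
cat context.txt

# -F treats the pattern as a fixed string, so the dots match only literal dots
grep -F '10.0.0.1' app.log

# -E enables extended regular expressions, e.g. alternation without backslashes
grep -E 'retry (1|2)' app.log
```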

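[z]less

Similar to [z]cat, it displays the contents of a file, but one screen at a time. The program allows scrolling and provides a lot of commands. It is useful for an on-the-fly search for a request, e.g. by client id, followed by looking up the associated response an unknown number of lines below: type /pattern to search forward and n to jump to the next match. It also accepts multiple files, e.g. zless node1.log.gz node2.log.gz. In that case, :n and :p switch to the next and previous file, and starting a search with * (as in /*pattern) lets it continue into the next file.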

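head / tail

These two commands show the first/last lines of a file (10 by default, or the number given with -n). They can come in handy for checking the dates covered by unnamed or concatenated log files. There are no standard zhead/ztail commands, so for compressed files pipe from zcat instead, e.g. zcat logs.gz | head -n 5.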
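As a sketch of the date check, the snippet below prints the first and last timestamp of a made-up concatenated log. It assumes each line starts with a date.

```shell
cd "$(mktemp -d)"

# Made-up concatenated log whose name says nothing about the period it covers
printf '%s\n' \
  '2024-03-01 08:00:01 INFO service started' \
  '2024-03-01 09:15:42 WARN slow query' \
  '2024-03-02 17:30:00 INFO service stopped' > combined.log
gzip -c combined.log > combined.log.gz

# The first and last lines are enough to see which dates the file covers
head -n 1 combined.log
tail -n 1 combined.log

# For the compressed copy, decompress to a pipe instead
first=$(zcat combined.log.gz | head -n 1)
last=$(zcat combined.log.gz | tail -n 1)

# ${var%% *} strips everything from the first space, leaving only the date
echo "from: ${first%% *} to: ${last%% *}"
```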

cut

Examples (the first prints b, the second b c):

echo "a b c" | cut -d" " -f2

echo "a b c" | cut -d" " -f2-

Useful for extracting selected columns separated by a specific delimiter.
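The sketch below applies cut to a made-up comma-separated log. It picks several fields at once and uses an open-ended range.

```shell
cd "$(mktemp -d)"

# Made-up comma-separated log: timestamp,level,message
printf '%s\n' \
  '2024-03-01T08:00:01,INFO,started' \
  '2024-03-01T08:00:05,ERROR,db timeout' > events.csv

# -d sets the delimiter and -f picks the fields: here the 1st and the 3rd
cut -d',' -f1,3 events.csv

# An open-ended range: everything from the 2nd field on
cut -d',' -f2- events.csv > level_and_message.txt
cat level_and_message.txt
```

cut accepts only a single-character delimiter and treats every occurrence as a separator. As a result, columns aligned with several spaces produce empty fields. Squeezing the spaces with tr -s first, or using awk, avoids that.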

tr

Example (translates abc to dbc):

echo "abc" | tr a d

A tool for replacing characters, or for removing them with -d.
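Since many readers will be on Windows, stripping carriage returns from CRLF files is probably the most practical use of tr -d. The sketch also shows character classes and -s (squeeze). tr reads only from standard input, which is why the file is passed with <.

```shell
cd "$(mktemp -d)"

# A file with Windows (CRLF) line endings
printf 'line one\r\nline two\r\n' > windows.txt

# -d deletes every carriage return, leaving Unix (LF) line endings
tr -d '\r' < windows.txt > unix.txt

# Character classes work too: convert to upper case
echo "error" | tr '[:lower:]' '[:upper:]'

# -s squeezes runs of a character into one, handy before cut on space-aligned columns
echo "a    b   c" | tr -s ' ' | cut -d' ' -f2
```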

sort

A basic command for sorting lines. It supports many modes, such as dictionary order (-d) and numeric sort (-n).
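The difference between text and numeric sorting matters as soon as numbers have different lengths. Here is a small sketch with made-up response times, sorted by the second column.

```shell
cd "$(mktemp -d)"

# Made-up "endpoint response_time_ms" pairs
printf '%s\n' '/api/users 120' '/api/orders 95' '/api/items 1500' > times.txt

# Default (text) comparison: "1500" sorts before "95" because '1' < '9'
sort -k2 times.txt

# -k2,2 limits the key to the 2nd field, -n compares it as a number, -r reverses the order
sort -k2,2 -n -r times.txt > slowest_first.txt
cat slowest_first.txt
```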

uniq

Usually fed with input piped from sort, it displays distinct lines. The -c option counts occurrences, and -u displays only lines that are not repeated. It detects only adjacent duplicates, which is why the input is sorted first.
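The sketch below shows why sort usually comes first. The input is a made-up list of error types.

```shell
cd "$(mktemp -d)"

printf '%s\n' timeout refused timeout timeout dns > errors.txt

# Without sorting, the non-adjacent "timeout" lines are counted in separate groups
uniq -c errors.txt

# Sorted first, each distinct line is counted once
sort errors.txt | uniq -c

# -u keeps only lines that occur exactly once
sort errors.txt | uniq -u > singletons.txt
cat singletons.txt
```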

xargs

Example: find /tmp -name log -type f -print0 | xargs -0 /bin/rm -f

Builds and runs commands from standard input, which is one of the ways you could automate the case mentioned in the zless description. The -print0 and -0 pair separates file names with null characters, so names containing spaces are handled safely.
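Here is one way to script the zless scenario: find the requests of a client, then look up their responses wherever they appear. It chains grep, sed and xargs. The log format (REQUEST/RESPONSE lines with id= and client=) is invented for this sketch, so adapt the patterns to your own logs.

```shell
cd "$(mktemp -d)"

# Made-up log where a response appears an unknown number of lines after its request
printf '%s\n' \
  'REQUEST id=101 client=acme' \
  'REQUEST id=102 client=globex' \
  'INFO unrelated line' \
  'RESPONSE id=102 status=200' \
  'RESPONSE id=101 status=500' > app.log

# 1. grep finds the requests of one client, sed keeps only the numeric id
# 2. xargs -I{} runs grep once per id, substituting it into the pattern
#    (the trailing space stops id=101 from also matching id=1010)
grep 'client=acme' app.log \
  | sed 's/.*id=\([0-9]*\).*/\1/' \
  | xargs -I{} grep "RESPONSE id={} " app.log > acme_responses.txt
cat acme_responses.txt
```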

split

Can be used to split a text file into smaller ones based on the number of lines (-l) or size (-b), e.g. split -b 200M log.txt.
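This sketch splits a generated file by line count and then joins the pieces again. The part_ prefix is an arbitrary choice; without it, split names the pieces xaa, xab and so on.

```shell
cd "$(mktemp -d)"

seq 1 25 > log.txt

# -l 10 puts at most 10 lines into each piece: part_aa, part_ab, part_ac
split -l 10 log.txt part_
ls part_*

# Concatenating the pieces in glob (alphabetical) order restores the original file
cat part_* > joined.txt
cmp log.txt joined.txt && echo "identical"
```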

sed, awk, jq

These three tools are more sophisticated, and unless you have to process some data, you probably won't need them. That's why I won't go into much detail about them. Sed is a stream editor that provides an easy way to transform text lines; sed 's/original/replacement/g' log.txt is an example of a text replacement. Awk is more of a scripting language that solves similar problems but is much more powerful. Jq is usually not part of a Linux distribution, but it excels at processing JSON files.
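For a taste of sed and awk, the sketch below runs both on a made-up log. It leaves jq out, since jq is often not installed.

```shell
cd "$(mktemp -d)"

printf '%s\n' \
  '2024-03-01 08:00:01 ERROR db timeout' \
  '2024-03-01 08:00:02 INFO ok' \
  '2024-03-01 08:00:03 ERROR db timeout' > log.txt

# sed: replace every occurrence (g) of a pattern on each line; the file itself is not modified
sed 's/db /database /g' log.txt

# awk: fields are split on whitespace; count lines per level ($3) and print a summary.
# The order of "for (key in array)" is unspecified, hence the final sort.
awk '{ count[$3]++ } END { for (level in count) print level, count[level] }' log.txt | sort > levels.txt
cat levels.txt
```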

The last group consists of tools that might not be that useful for software developers but are critical for maintainers and performance engineers:

Glob

Whenever a command accepts multiple files as input, you can use glob patterns. The shell expands them into the matching file names before the command runs. They might look similar, but don't mistake them for regular expressions (regex).

These patterns use the wildcards * (any sequence of characters), ? (exactly one character) and [...] (one character from the set), which makes our lives a bit easier. They're useful on multi-node servers, especially when there is a shared directory, or in general whenever we want to search through multiple files.
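The sketch below runs the three wildcards against a few made-up per-node log files. It uses echo to show what each pattern expands to before any command sees it.

```shell
cd "$(mktemp -d)"

# Made-up per-node log files, as they might appear in a shared directory
for name in node1.log node2.log node10.log notes.txt; do
  echo "ERROR on $name" > "$name"
done

# * matches any sequence of characters, so all three node logs match
echo node*.log

# ? matches exactly one character, so node10.log is left out
echo node?.log

# [...] matches one character from the set
echo node[12].log

# The shell expands the pattern before grep runs; -c prints a match count per file
grep -c ERROR node*.log
```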

Piping and redirection

Every program you run in the shell has three standard streams: STDIN, STDOUT and STDERR. The following symbols allow for:

- redirecting output to a file, overwriting it: >

- appending output to a file: >>

- reading input from a file: <

- redirecting errors (STDERR) to a file: 2>

- passing output to another program (a pipe): |
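The sketch below exercises each of these symbols. It also shows 2>&1, a common addition not listed above that merges errors into regular output. The missing_file name is deliberately nonexistent so that ls produces an error.

```shell
cd "$(mktemp -d)"

# > creates or overwrites the file, >> appends to it
echo "first run" > out.log
echo "second run" >> out.log

# < feeds a file to a program's standard input
wc -l < out.log

# 2> sends only error messages (STDERR) to a file; regular output still goes to the screen
ls out.log missing_file 2> errors.log

# | connects STDOUT of one program to STDIN of the next
grep run out.log | wc -l

# 2>&1 (placed after the file redirection) sends STDERR wherever STDOUT goes
ls out.log missing_file > all.log 2>&1
```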

History

One final tip, for situations where you work with multiple environments running different applications and might forget where the logs are stored or how a specific command went: Bash usually saves its history in ~/.bash_history, which you can browse with the history command. Check it for recent commands; maybe something will ring a bell.

Summary

Learning Unix tools is hardly a waste of time. They come in handy in an enormous number of situations, including:

- finding more details attached to the logs based on some id/exception/timestamp

- downloading log files from a remote server for complex local processing

- processing data into the desired format

- comparing two files and computing their union or difference

- preparing some simple statistics (e.g. error occurrence)

- quickly searching through compressed log files

- displaying parts of the file which might be hard to load at once in an editor

- aggregating logs from different services/nodes/servers

- getting by in environments with no access to any advanced tools

If you find yourself repeating some sequence of actions, it's a good indication that it can be automated and extracted into a script.
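As an example of such an extraction, the sketch below wraps several of the tools above into a function that lists the most frequent error messages across plain and compressed logs. top_errors is a made-up name. It assumes lines shaped like "<date> <time> ERROR <message>" and relies on GNU gzip's zcat -f, which passes uncompressed files through unchanged.

```shell
# Hypothetical helper: the five most frequent error messages across the given log files.
# Assumes the format "<date> <time> ERROR <message>"; adjust the pattern and fields for yours.
top_errors() {
  zcat -f "$@" \
    | grep ' ERROR ' \
    | cut -d' ' -f4- \
    | sort \
    | uniq -c \
    | sort -rn \
    | head -n 5
}

# Usage with made-up logs: one plain file and one compressed file
cd "$(mktemp -d)"
printf '%s\n' '2024-03-01 08:00:01 ERROR db timeout' '2024-03-01 08:00:02 INFO ok' > node1.log
printf '%s\n' '2024-03-01 08:00:03 ERROR db timeout' '2024-03-01 08:00:04 ERROR disk full' \
  | gzip -c > node2.log.gz
top_errors node1.log node2.log.gz
```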